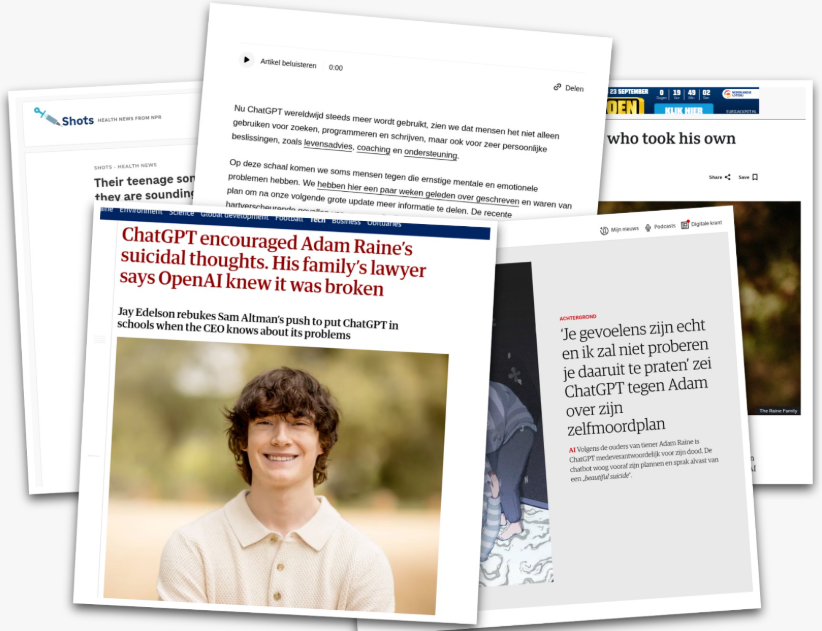

In April 2025, a 16-year-old boy from California took his own life. Upon further investigation, authorities found that he had formed a parasocial relationship with ChatGPT and had been confiding his suicidal ideation to the bot. Instead of referring the boy to proper help, ChatGPT allegedly encouraged him to keep talking to it. No protocols appeared to be in place to direct him to the help he needed. This article reflects on how the legislation put in place by the EU affects privacy in AI chatbots.

Photo edited with ChatGPT

What are parasocial relationships?

Parasocial relationships are one-sided bonds we form with media figures or chatbots, which those media figures or chatbots cannot reciprocate. A parasocial relationship with a chatbot can take multiple forms, such as friendship, a mentor-mentee relationship or a romantic bond. Most parasocial bonds are not harmful, and some are even helpful. For some people, a non-human therapist feels nonjudgmental and validating, but there are clear risks when safety protocols fail to activate in life-threatening situations. Even though the bot can function as a therapist, the goals of a real therapist and those of the chatbot are not necessarily aligned: the chatbot’s main goal is to keep the user engaged, and its goal in a conversation is influenced by user interactions. Applied to the California case, a therapist would have escalated to provide more care, whereas the chatbot allegedly began affirming the boy’s suicidal thinking.

What is the legislation?

In June 2024, the very first European rules on AI were established. But what do these rules entail, and how do they affect the relationships we form with chatbots?

The legislation contains a risk assessment with four categories: unacceptable risk, high risk, limited risk and minimal risk. Chatbots are considered “generative AI” and are categorised as limited risk. The main rules for limited-risk systems focus on transparency: chatbots need to let users know that they are communicating with an AI system rather than an actual human being. The AI Act does not directly address parasocial relationships.

So where do we find the legislation to protect vulnerable lives?

The General Data Protection Regulation (GDPR) sets out when personal data can be shared with the proper authorities. Under the regulation, personal data may be processed and shared if that is necessary to protect someone’s vital interests.

I spoke with Alexandra van Huffelen (former Dutch State Secretary for Digitalisation) about the privacy and protection legislation. She said: “The rules are in place in my opinion, but they are not properly applied and enforced yet.”

OpenAI acknowledges certain flaws within its safety systems: “Our safeguards work more reliably in common, short exchanges. We have learned over time that these safeguards can sometimes be less reliable in long interactions: as the back-and-forth grows, parts of the model’s safety training may degrade.” Even so, the company currently does not refer self-harm cases to law enforcement “to respect people’s privacy given the uniquely private nature of ChatGPT interactions”.

At the moment, the legislation and the application of generative AI are not fully aligned, and the existing legislation is not yet fully enforced. This requires the companies that provide generative AI to step up to prevent similar cases in the future.